OVERVIEW

Thank you in advance for your efforts as a submission reviewer for KubeCon + CloudNativeCon Europe.

Below you will find guides to Sessionize’s evaluation modes, along with best practices for reviewing your set of proposals. Please bookmark this page for easy reference. If you have any questions, please email the CNCF Content Team.

By participating as a reviewer, you agree to the CNCF Program Committee and Events Volunteer Guidelines.

Program Committee Responsibilities

CNCF ambassadors and maintainers across various projects are invited to apply for the Program Committee. To ensure diversity on the committee and mitigate bias, the program co-chairs carefully select PC members, taking into account factors such as their specific interests and domain expertise. Members are also selected from a diverse range of backgrounds, considering gender, geographic, company, and project diversity.

Program Committee members carefully evaluate 75-125 proposals in their assigned topic for each event. They are limited to participating in a maximum of two events per year.

Program Committee Benefits

To say thank you for your hard work, we are offering reviewers a tiered registration discount system:

Registration codes will be sent within a week after the schedule announcement and will include your online Cloud Native Store Gift Card discount code. Please reach out to speakers@cncf.io if you have any questions regarding the benefits listed above.

Track Chair Responsibilities

For each track, two Track Chairs are chosen from the applicants for the Program Committee, with a preference for subject matter experts and those who have previous PC experience.

Track Chairs are not part of the Program Committee, which gives them a fresh and unbiased perspective during the evaluation process. They are limited to serving as a Track Chair for one event per year.

Track Chair Benefits

To say thank you for your hard work, we are offering Track Chairs:

Registration codes will be sent within a week after the schedule announcement and will include your online Cloud Native Store Gift Card discount code. Please reach out to speakers@cncf.io if you have any questions regarding the benefits listed above.

STAR RATING EVALUATION MODE

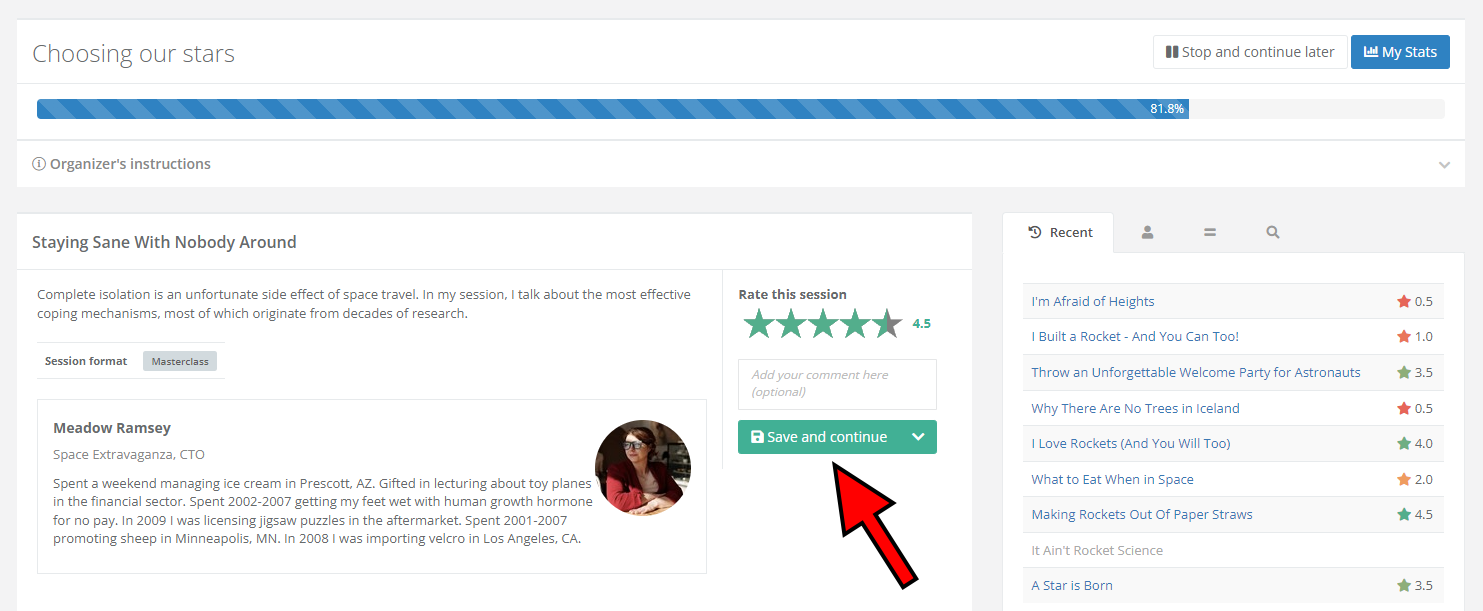

The Star Rating evaluation mode is straightforward: review the session information and assign a rating from one to five stars. Half-star ratings (0.5, 1.5, 2.5, 3.5, 4.5) are also available. When you are done, click the Save and Continue button to confirm your rating and proceed to the next session.

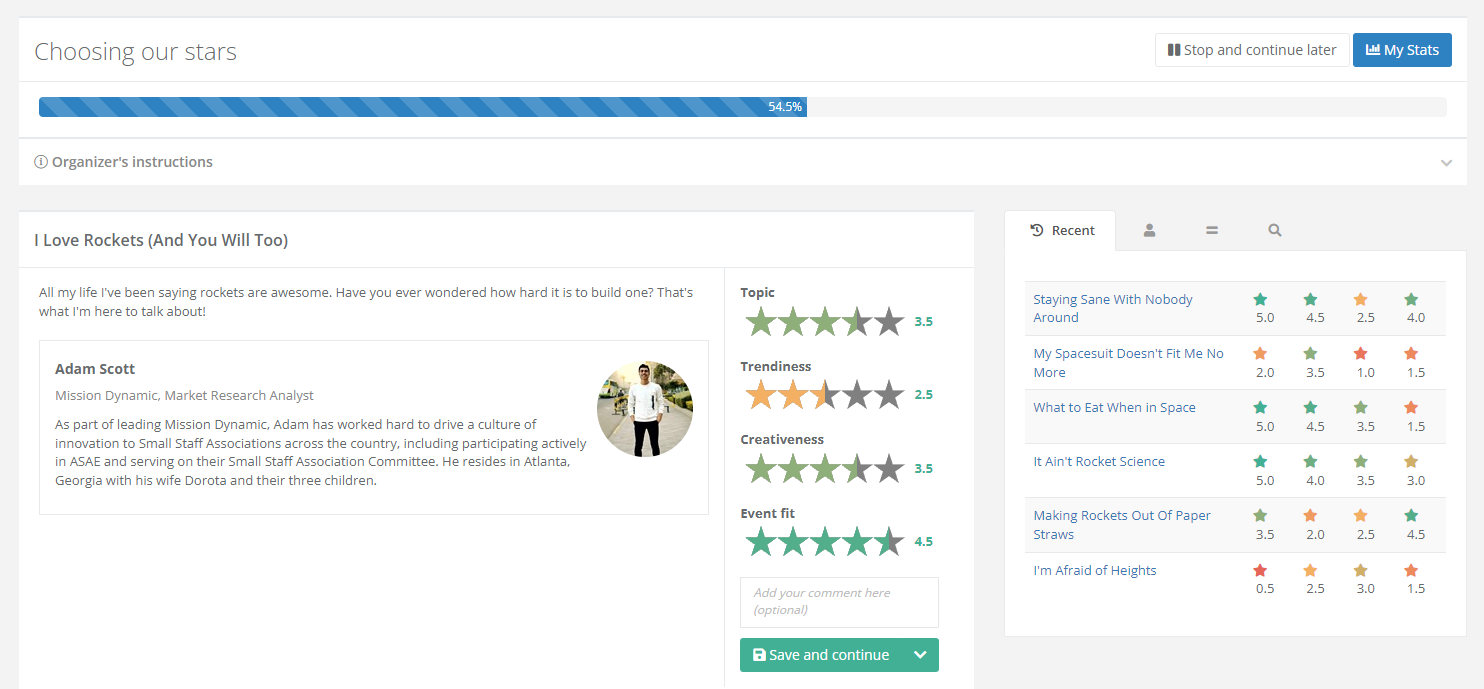

KubeCon + CloudNativeCon uses a Star Rating evaluation plan with multiple criteria. Rather than assigning a single overall rating, you will assess each session on the following four criteria:

You must also provide feedback in the form of a comment for each session. Make sure your feedback is constructive, especially for rejected proposals, since submitting authors may range from a VP at a large company to a university student. Constructive feedback may include highlighting the strengths of a proposal, offering helpful suggestions, and providing factual observations.

Avoid personal attacks; focus instead on objective feedback that can help improve the proposal. We also strongly advise against vague comments such as “Scoring was tough, I had to cut this” or “LGTM.” Instead, provide thoughtful, specific comments that will help the co-chairs make their final selections.

Once you have rated a session on each criterion and provided constructive feedback, click Save and Continue to proceed to the next session. Please note that if your comments are deemed unconstructive, you may not be invited to serve as a Program Committee member in the future.

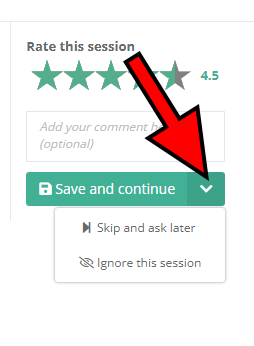

If you find it hard to decide on a session, you can skip it and come back to it later. Click the arrow next to the Save and Continue button to expand the menu, then select the Skip and ask later option. This is particularly useful when you have only just started the evaluation process and want a better sense of the overall quality of the submitted sessions.

If a session covers a subject you’re completely unfamiliar with, or poses a conflict of interest, click the Ignore this session button. The evaluation system will not show you that session again.

Track your progress

During the evaluation process, a progress bar at the top of the page shows how far along you are. If you need to pause at any point, click the Stop and continue later button above the progress bar. When you return, you can resume the evaluation where you left off.

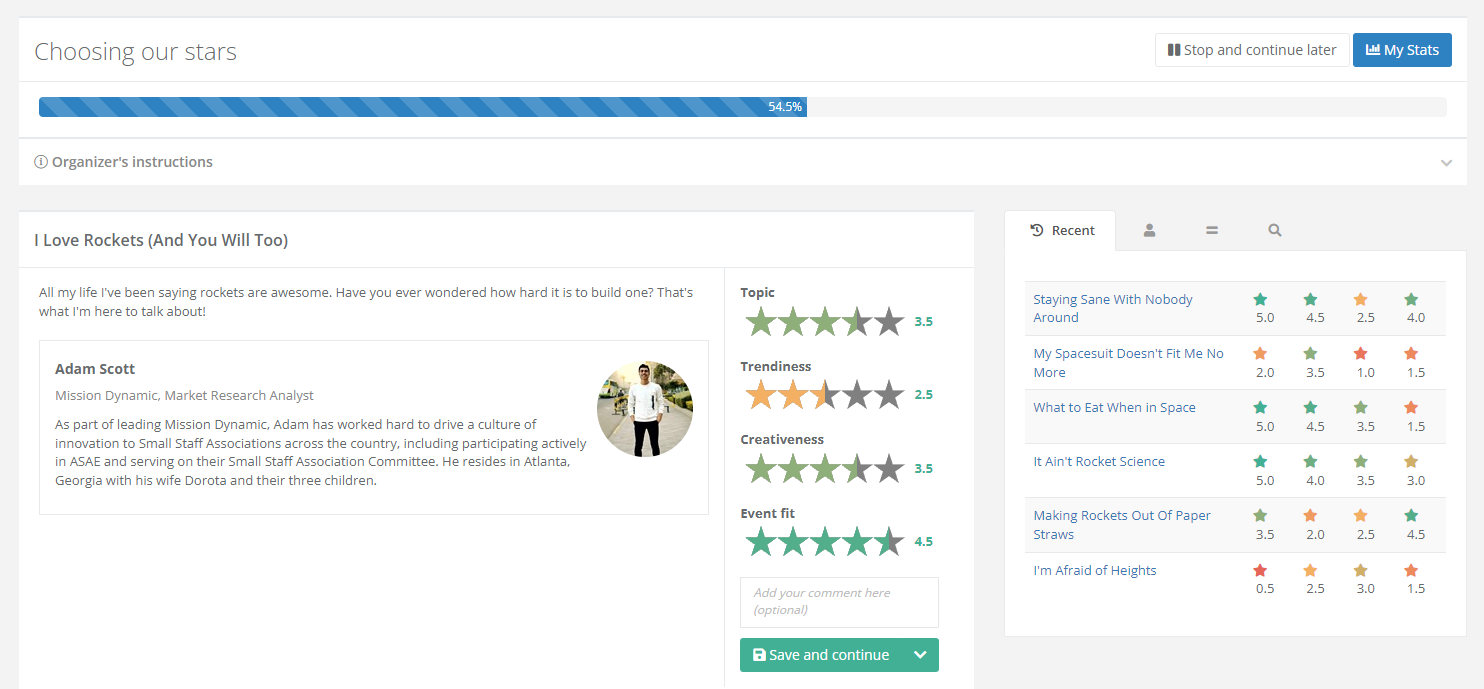

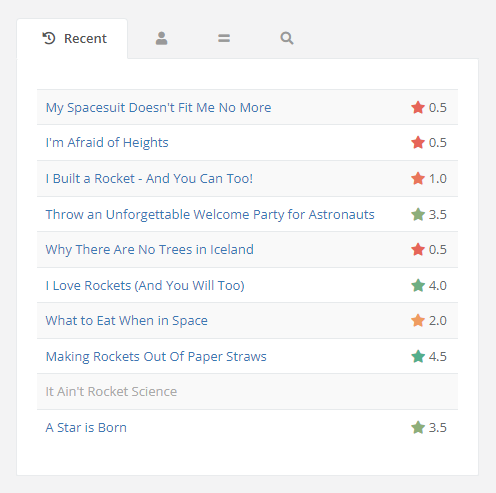

On the right is a box with several useful tabs. The default tab, Recent, lets you keep track of your past session evaluations. You can also click any session listed there to reopen it and change your evaluation.

Here’s a complete overview of the tabs in this box:

- Recent – a list of sessions you recently evaluated

- Speaker – see other sessions submitted by the same speaker, if any

- Similar – browse similar sessions

- Search – look through all nominated sessions

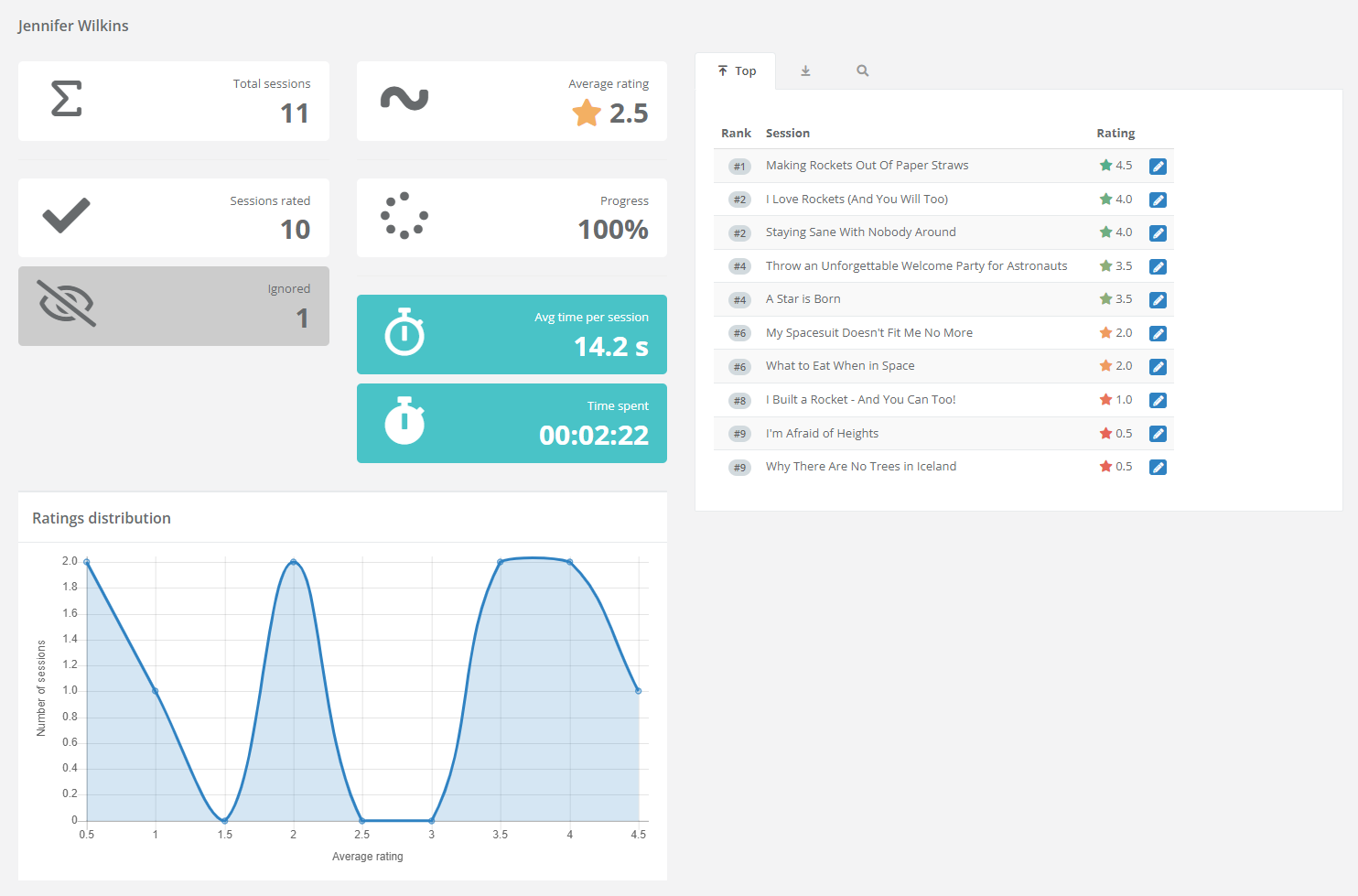

Complete the evaluation and view your stats

Once you’re done with the evaluation, you’ll automatically be redirected to the Evaluation page. Open the evaluation plan you’ve just completed to view your statistics and, if needed, revise your rating for any session by clicking its edit button.

Your scores will be combined with those of the other members in your review track, and the top 30% of sessions will move on to the next stage of evaluation.
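This guide does not say exactly how scores are combined. As a rough illustration only, the sketch below assumes that each reviewer’s four criterion ratings are averaged, that those per-reviewer averages are then averaged across the track, and that the 30% cutoff rounds up. The function names `session_score` and `advance_top` are hypothetical and are not part of Sessionize.

```python
from statistics import mean


def session_score(reviews: list[list[float]]) -> float:
    """Assumed scoring: average each reviewer's criterion ratings,
    then average those per-reviewer results across all reviewers."""
    return mean(mean(criteria) for criteria in reviews)


def advance_top(sessions: dict[str, list[list[float]]], top_percent: int = 30) -> list[str]:
    """Return the IDs of the top `top_percent` percent of sessions by combined score."""
    scores = {sid: session_score(reviews) for sid, reviews in sessions.items()}
    # Highest combined score first; ties keep their original order.
    ranked = sorted(scores, key=scores.get, reverse=True)
    # Ceiling division (an assumption): a partial slot rounds up, so at least one session advances.
    cutoff = (len(ranked) * top_percent + 99) // 100
    return ranked[:cutoff]


if __name__ == "__main__":
    # Hypothetical track: ten sessions, each rated by two reviewers on four criteria.
    sessions = {f"S{i}": [[i / 2] * 4, [i / 2] * 4] for i in range(1, 11)}
    print(advance_top(sessions))
```

With ten sessions, 30% means three advance. The real process may weight criteria, normalize reviewers, or handle ties differently.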

CNCF Program Committee + Event Volunteer Guidelines

Volunteers who help with the planning, organization, or production of a CNCF event are often seen as representatives of the CNCF community or CNCF project that the event relates to, and their actions can meaningfully impact participants’ experience and perception of the event. Therefore, and in the interest of fostering an open, positive, and welcoming environment for our community, it’s important that all event volunteers hold themselves to a high standard of professional conduct as described below.

These guidelines apply to a volunteer’s conduct and statements that relate to or could have an impact on any CNCF event that the volunteer helps plan, organize, select speakers for, or otherwise serve as a volunteer for. These guidelines apply to relevant conduct occurring before, during, and after the event, both within community spaces and outside such spaces (including statements from personal social media accounts), and to both virtual and physical events. In addition to these guidelines, event volunteers must also comply with The Linux Foundation Event Code of Conduct and the CNCF Code of Conduct.

Be professional and courteous

Event volunteers will:

Express feedback constructively, not destructively

The manner in which event volunteers communicate can have a large impact personally and professionally on others in the community. Event volunteers should strive to provide feedback or criticism relating to the event or any person or organization’s participation in the event in a constructive manner that supports others in learning, growing, and improving (e.g., offering suggestions for improvement). Event volunteers should avoid providing feedback in a destructive or demeaning manner (e.g., insulting or publicly shaming someone for their mistakes).

Be considerate when choosing communication channels

Event volunteers should be considerate in choosing channels for communicating feedback. Positive or neutral feedback may be communicated in any channel or medium. In contrast, criticism about any individual event participant, staff member, or volunteer should be communicated in one or more private channels (rather than publicly) to avoid causing unnecessary embarrassment. Criticism about an event that is not about specific individuals may be expressed privately or publicly, so long as it is expressed in a respectful, considerate, and professional manner.

Treat sensitive data confidentially and with respect

Event volunteers may have access to details about proposed or accepted speakers and the contents of their talks. They are required to adhere to The Linux Foundation’s guidelines regarding use of this information and may only use it for the purpose of choosing talks for an event. They are prohibited from using this data for any other purpose, including but not limited to the following:

Changes to These Guidelines and Consequences for Noncompliance

The event organizers may update these guidelines from time to time and will notify volunteers by email and via the CNCF Slack channels designated for event volunteers. However, any changes to these guidelines will not apply retroactively. If the Linux Foundation Events team determines that a volunteer has violated these guidelines or The Linux Foundation Event Code of Conduct, the violation may result in the volunteer’s immediate suspension or removal from any event-related volunteer positions they hold, including participation in event-related committees. If these guidelines are updated and a volunteer does not agree to the changes, their participation in the event-related volunteer position will cease until they do agree.

CONTACT US

If you require any assistance reviewing proposals or have questions about the review process or any of the best practices we have suggested, please contact us for assistance.